How Is the Usage of Reinforcement Learning Emerging? Figure Out RL's Importance, Algorithms, and Much More...

The Reinforcement Learning (RL) problem involves an agent exploring an unknown environment to achieve a goal. RL is based on the hypothesis that all goals can be described by the maximization of expected cumulative reward. The agent must learn to sense and perturb the state of the environment using its actions to derive maximal reward. The formal framework for RL borrows from the problem of optimal control of Markov Decision Processes (MDPs).

The main elements of an RL system are:

- The agent or the learner

- The environment the agent interacts with

- The policy that the agent follows to take actions

- The reward signal that the agent observes upon taking actions
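The four elements above can be sketched as a minimal interaction loop. The `LineWorld` environment, the `always_right` policy, and the reward values are hypothetical, made up purely for illustration; they are not taken from any particular library.

```python
class LineWorld:
    """Hypothetical toy environment: walk along positions 0..4, goal at 4."""

    def __init__(self, size=5):
        self.size = size
        self.state = 0

    def reset(self):
        self.state = 0
        return self.state

    def step(self, action):
        # action is -1 (left) or +1 (right); the position is clipped to the line
        self.state = min(max(self.state + action, 0), self.size - 1)
        done = self.state == self.size - 1
        reward = 1.0 if done else 0.0  # reward signal observed by the agent
        return self.state, reward, done


def always_right(state):
    """A fixed policy: maps any state to the action 'move right'."""
    return +1


def run_episode(env, policy, max_steps=100):
    """Let the agent interact with the environment for one episode."""
    state = env.reset()
    total_reward = 0.0
    steps = 0
    for _ in range(max_steps):
        action = policy(state)                  # the agent chooses an action
        state, reward, done = env.step(action)  # the environment responds
        total_reward += reward
        steps += 1
        if done:
            break
    return total_reward, steps


total_reward, steps = run_episode(LineWorld(), always_right)
print(total_reward, steps)
```

Here the policy is fixed by hand; a learning agent would instead adjust its policy based on the rewards it observes.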

A useful abstraction of the reward signal is the value function, which faithfully captures the ‘goodness’ of a state. While the reward signal represents the immediate benefit of being in a certain state, the value function captures the cumulative reward that is expected to be collected from that state on, going into the future. The objective of an RL algorithm is to discover the action policy that maximizes the average value that it can extract from every state of the system.
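To make the difference between reward and value concrete, here is a small sketch that turns a sequence of immediate rewards into the cumulative reward collected from each step onward. The discount factor `gamma` is an assumption added for illustration (it weights future rewards less than immediate ones) and is not mentioned in the text above. A value function is the expectation of this quantity; a single reward sequence gives only one sample of it.

```python
def discounted_returns(rewards, gamma=0.9):
    """Return G_t = r_t + gamma * r_{t+1} + gamma^2 * r_{t+2} + ... for every step t."""
    returns = [0.0] * len(rewards)
    running = 0.0
    # Work backwards so each return reuses the one that follows it
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


rewards = [0.0, 0.0, 1.0]  # hypothetical episode: reward only at the last step
returns = discounted_returns(rewards, gamma=0.5)
print(returns)  # [0.25, 0.5, 1.0]
```

Even though the first two steps earn no immediate reward, their returns are positive because they lead towards the rewarding final step.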

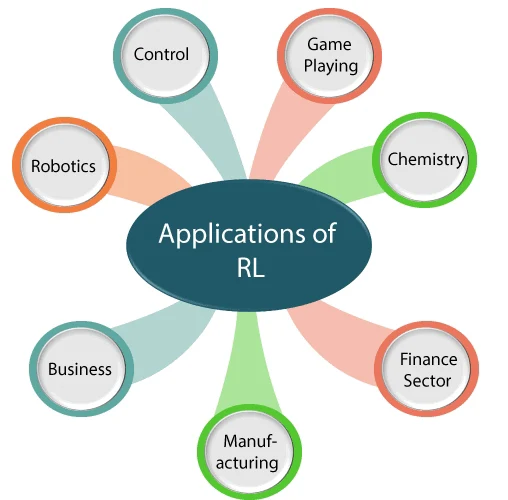

Reinforcement learning has found applications in a variety of fields. The top 10 use cases include:

1. Gaming

Reinforcement learning has paved the way for AI that can master complex games and often outperform humans. For example, Google’s DeepMind used reinforcement learning to train AlphaGo, which defeated two world champions of Go: Lee Sedol in 2016 and Ke Jie in 2017.

2. Robotics

Reinforcement learning trains robots to perform tasks requiring fine motor skills, such as object manipulation, and more complex tasks, like autonomous navigation. Consequently, this leads to the creation of autonomous robots that can adapt to a variety of situations and perform tasks more efficiently.

3. Finance

In the finance sector, this branch of machine learning plays a crucial role in portfolio management and algorithmic trading, optimizing strategies to maximize returns and minimize risk. JPMorgan’s LOXM, for instance, is a trading algorithm that leverages reinforcement learning to execute trades at the best prices and maximum speed.

4. Traffic Control

Reinforcement learning algorithms help optimize traffic signals in real time, thereby reducing congestion and improving overall traffic flow. This can improve urban mobility and reduce the environmental impact of traffic.

5. Power Systems

Reinforcement learning can optimize the management and distribution of power in power systems, leading to more efficient and cost-effective energy usage. This makes power systems more sustainable and reliable, especially where renewable energy sources are involved.

6. Recommendation Systems

Netflix and Amazon use reinforcement learning in their recommendation systems to offer personalized suggestions based on user behavior. This improves user engagement and satisfaction, driving customer retention and revenue growth.

7. Healthcare

In healthcare, reinforcement learning can help tailor treatment plans to individual patient data, potentially improving patient outcomes. This proves particularly useful in chronic disease management, where personalized treatment can significantly improve the quality of life.

8. Autonomous Vehicles

Reinforcement learning is crucial in developing autonomous vehicles, enabling them to learn from their environment and make safe, efficient driving decisions. Companies such as Waymo and Tesla are leading the way in autonomous vehicles with the help of reinforcement learning.

9. Supply Chain Management

This branch of machine learning has also optimized logistics and inventory management in supply chain management. The end result is more cost savings and improved efficiency. Industries with intricate supply chains, like manufacturing and retail, find this especially beneficial.

10. Natural Language Processing

In natural language processing, reinforcement learning improves machine translation, sentiment analysis, and other tasks by learning from feedback and adjusting its strategies. This leads to the creation of more accurate and nuanced language models, thereby improving the quality of machine-generated text.

Reinforcement learning is applicable to a wide range of complex problems that are hard to tackle with other machine learning algorithms. RL is often viewed as a step closer to artificial general intelligence (AGI), since an agent pursues a long-term goal while exploring various possibilities autonomously. Some of the benefits of RL include:

- Focuses on the problem as a whole. Conventional machine learning algorithms are designed to excel at specific subtasks, without a notion of the big picture. RL, on the other hand, doesn’t divide the problem into subproblems; it directly works to maximize the long-term reward. It has an obvious purpose, understands the goal, and is capable of trading off short-term rewards for long-term benefits.

- Does not need a separate data collection step. In RL, training data is obtained via the direct interaction of the agent with the environment. Training data is the learning agent’s experience, not a separate collection of data that has to be fed to the algorithm. This significantly reduces the burden on the supervisor in charge of the training process.

- Works in dynamic, uncertain environments. RL algorithms are inherently adaptive and built to respond to changes in the environment. In RL, time matters: the experience that the agent collects is not independent and identically distributed (i.i.d.), unlike the data that conventional machine learning algorithms assume. Because the dimension of time is built into the mechanics of RL, the learning adapts as the environment changes.

Companies working with reinforcement learning include:

- EpiSci. Private Company. Founded 2012. ...

- Imandra. Private Company. Founded 2014. ...

- ProteinQure. Private Company. Founded 2017.

- Shield AI Inc. Private Company. ...

- InstaDeep (fka Digital Ink) Private Company. ...

- Intellisense Systems, Inc. n/a. ...

- Companion. Private Company. ...

- Ocean Motion Technologies, Inc.

General advice when using reinforcement learning:

- Read about RL and Stable Baselines

- Do quantitative experiments and hyperparameter tuning if needed

- Evaluate the performance using a separate test environment

- For better performance, increase the training budget

- Like any other subject, if you want to work with RL, you should first read about it

- The Stable Baselines documentation covers basic usage and guides you towards more advanced concepts of the library (e.g. callbacks and wrappers).

- Reinforcement Learning differs from other machine learning methods in several ways. The data used to train the agent is collected through interactions with the environment by the agent itself (compared to supervised learning where you have a fixed dataset for instance).

- This dependence can lead to a vicious circle: if the agent collects poor-quality data (e.g., trajectories with no rewards), then it will not improve and will continue to amass bad trajectories.

- This factor, among others, explains why results in RL may vary from one run to another.

- For this reason, you should always do several runs to have quantitative results.

- Good results in RL generally depend on finding appropriate hyperparameters. Recent algorithms (PPO, SAC, TD3) normally require little hyperparameter tuning; however, don’t expect the default values to work on every environment.

- A best practice when you apply RL to a new problem is to do automatic hyperparameter optimization.

- When applying RL to a custom problem, you should always normalize the input to the agent (e.g. using VecNormalize for PPO2/A2C) and look at common preprocessing done on other environments (e.g. for Atari, frame-stack, …).
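As an illustration of the normalization advice, the sketch below keeps a running mean and variance of the observations and rescales each new observation with them. The `RunningNormalizer` class is hypothetical and handles scalar observations only; it shows the general idea and is not the implementation used by `VecNormalize`.

```python
import math


class RunningNormalizer:
    """Tracks a running mean and variance (Welford's method) for scalar observations."""

    def __init__(self, epsilon=1e-8):
        self.count = 0
        self.mean = 0.0
        self.m2 = 0.0  # sum of squared deviations from the running mean
        self.epsilon = epsilon  # avoids division by zero when the variance is 0

    def update(self, x):
        """Fold one new observation into the running statistics."""
        self.count += 1
        delta = x - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (x - self.mean)

    def normalize(self, x):
        """Rescale x to roughly zero mean and unit variance."""
        variance = self.m2 / self.count if self.count > 0 else 1.0
        return (x - self.mean) / math.sqrt(variance + self.epsilon)


normalizer = RunningNormalizer()
for obs in [10.0, 20.0, 30.0]:  # hypothetical raw observations
    normalizer.update(obs)

print(normalizer.mean)             # 20.0
print(normalizer.normalize(20.0))  # 0.0
```

Because the statistics are updated as data arrives, the same object can be used while the agent is still collecting experience; at evaluation time you would typically stop updating and only call `normalize`.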

Tips and Tricks when implementing an RL algorithm

When you try to reproduce an RL paper by implementing the algorithm, we recommend following these steps to get a working RL algorithm:

- Read the original paper several times

- Read existing implementations (if available)

- Try to have some “sign of life” on toy problems

- Validate the implementation by making it run on harder and harder envs (you can compare results against the RL zoo)

Key RL concepts and algorithms to know include:

- Markov decision process (MDP)

- Bellman equation.

- Dynamic programming.

- Value iteration.

- Policy iteration.

- Q-learning.
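Several of these ideas fit together in a few lines of code. The sketch below runs value iteration, a dynamic programming method that repeatedly applies the Bellman optimality backup, on a tiny deterministic MDP. The three-state `TRANSITIONS` table, the rewards, and the discount factor are all hypothetical and chosen only so that the result is easy to check by hand.

```python
# Hypothetical deterministic MDP: TRANSITIONS[state][action] = (next_state, reward)
TRANSITIONS = {
    0: {"left": (0, 0.0), "right": (1, 0.0)},
    1: {"left": (0, 0.0), "right": (2, 1.0)},
    2: {},  # terminal state: no actions available
}


def value_iteration(transitions, gamma=0.9, tolerance=1e-9):
    """Apply the Bellman optimality backup until the values stop changing."""
    values = {state: 0.0 for state in transitions}
    while True:
        new_values = {}
        for state, actions in transitions.items():
            if not actions:
                new_values[state] = 0.0  # nothing more to collect after the end
                continue
            # V(s) = max over actions of [reward + gamma * V(next_state)]
            new_values[state] = max(
                reward + gamma * values[next_state]
                for next_state, reward in actions.values()
            )
        delta = max(abs(new_values[s] - values[s]) for s in transitions)
        values = new_values
        if delta < tolerance:
            return values


def greedy_policy(transitions, values, gamma=0.9):
    """Pick, in every non-terminal state, the action with the best backup."""
    policy = {}
    for state, actions in transitions.items():
        if actions:
            policy[state] = max(
                actions,
                key=lambda a: actions[a][1] + gamma * values[actions[a][0]],
            )
    return policy


values = value_iteration(TRANSITIONS)
policy = greedy_policy(TRANSITIONS, values)
print(values)
print(policy)
```

Value iteration needs the full transition table up front. Q-learning targets the same optimal values but estimates them from sampled transitions, so it can be used when the model of the environment is unknown.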
